SAN Design Best Practices for IBM SVC and FlashSystem Stretched and HyperSwap Clusters

I recently worked with a customer whose SAN for a HyperSwap V7000 cluster was implemented as depicted in the diagram below. An SVC or FlashSystem cluster that is configured for HyperSwap has half of its nodes at one site and half at the other. The I/O groups are configured so that every node in an I/O group is at the same site. In the example from the diagram, the nodes at Site 1 were in one I/O group and the nodes at Site 2 were in another. A stretched cluster also has its nodes split across two sites; however, each I/O group is made up of a node from each site. So for the diagram below, in a stretched configuration, a node from Site 1 and a node from Site 2 would form an I/O group.

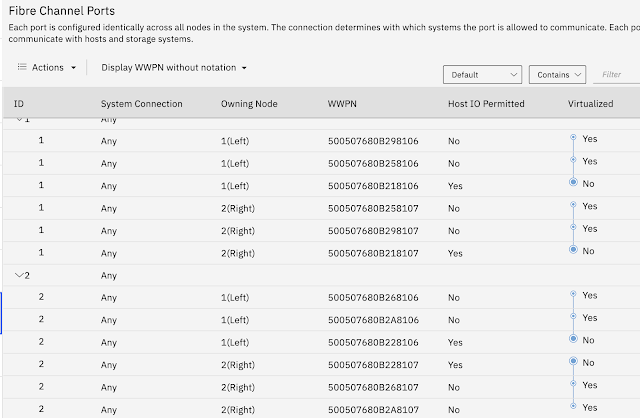

From the diagram we see that each site had two V7000 nodes. Each node had connections to two switches at each site. The switches were connected as pictured in the diagram to create two redundant fabrics that spanned the sites. The customer had hosts and third-party storage also connected to the switches, although the V7000 was not virtualizing the storage. The storage was zoned directly to hosts. Several of these hosts had the same host ports zoned to both the third-party storage and the V7000.

HyperSwap requires that the SVC or FlashSystem node ports used for internode communication be isolated on their own dedicated fabric. This is called the 'private' SAN. The 'public' SAN is used for host and controller connections to the Spectrum Virtualize cluster and for all other SAN devices. The private SAN is required in addition to isolating these ports with the port-masking feature. The private fabric can be either virtual (Cisco VSANs or Brocade virtual fabrics) on the same physical switches as the public SAN, or it can be built on separate physical switches, but either way it must have its own separate ISLs between the sites. The SAN in the diagram above has no private SAN, either physical or virtual. As a result, the internode traffic shares the same ISLs as the general traffic between the sites. A common related mistake I see is that a customer implements a private SAN using virtual fabrics but then allows them to traverse the same physical links as the public SAN. This is not correct. The private SAN must be completely isolated.
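The "same physical links" mistake can be checked mechanically once you have an inventory of which fabrics are allowed on each ISL. Here is a minimal sketch, assuming a hand-built inventory; the ISL names, the `shared_isls` function, and the "private"/"public" labels are all hypothetical and do not come from any switch vendor's tooling.

```python
def shared_isls(isl_fabrics):
    """Return the physical ISLs that carry both private and public traffic.

    isl_fabrics maps a physical ISL name to the set of fabric roles
    ("private" or "public") allowed on that link. Hypothetical format.
    """
    return sorted(
        isl
        for isl, roles in isl_fabrics.items()
        if "private" in roles and "public" in roles
    )


# Hypothetical inventory: isl-a2 trunks both virtual fabrics over one link.
isl_fabrics = {
    "isl-a1": {"public"},
    "isl-a2": {"private", "public"},
    "isl-b1": {"private"},
}

# Any ISL listed here breaks the isolation requirement.
print(shared_isls(isl_fabrics))
```

An empty result is what you want: every inter-site link carries either the private fabric or the public fabric, never both.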

This isolation is a requirement for two reasons:

In a standard topology cluster, the keep-alives and other cluster-related traffic transmitted between the nodes do not cross ISLs. Best practice for a standard cluster is to co-locate all node ports on the same physical switches. In a HyperSwap configuration, because the cluster is multi-site, internode traffic must cross the ISLs to reach the nodes at the other site.

All writes by a host to volumes configured for HyperSwap are mirrored to the remote site. This is done over the internode ports on the private SAN. If these writes are delayed or cannot get through, host performance suffers, because good status for a write is not sent to the host until the write completes at the remote site.
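To see why ISL congestion shows up directly in host write latency, here is a deliberately simplified sketch. It assumes one inter-site round trip per mirrored write and treats congestion as a single queueing delay; the function name, parameters, and figures are hypothetical illustrations, not measurements or the product's actual write path.

```python
def write_ack_lower_bound_us(local_commit_us, isl_rtt_us, isl_queue_us, remote_commit_us):
    """Rough lower bound on host write latency, in microseconds.

    Simplified model: the host gets good status only after both the local
    copy and the remote copy are committed, and the remote copy has to
    cross the inter-site link and come back.
    """
    remote_path_us = isl_rtt_us + isl_queue_us + remote_commit_us
    return max(local_commit_us, remote_path_us)


# Hypothetical figures: a healthy ISL versus one with 5 ms of queueing delay.
healthy = write_ack_lower_bound_us(200, 500, 0, 200)
congested = write_ack_lower_bound_us(200, 500, 5000, 200)
print(healthy, congested)
```

In this model any queueing delay on the ISL is added in full to every mirrored write, which is why the internode traffic needs links that general host traffic cannot congest.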

If the shared ISLs become congested, there is a risk that both the volume mirroring and the cluster communications will be adversely impacted. For this customer, that is precisely what happened. The customer had hosts at Site 2 that were zoned to both third-party storage and V7000 node ports at Site 1. The third-party storage was having severe physical link issues and became a slow-drain device. Host communication with that storage backed up enough to eventually congest the ISLs on one of the fabrics. When this happened, nodes began to assert because the internode ports became congested: the HyperSwap writes had to cross a severely congested ISL.
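Host ports zoned to both kinds of storage, as in this incident, can be found from an export of the zoning configuration. The sketch below assumes zones are available as a simple name-to-members mapping; the alias names and the `dual_zoned_host_ports` function are hypothetical, and any zone member that is not a known storage port is treated as a host port.

```python
def dual_zoned_host_ports(zones, svc_ports, third_party_ports):
    """Return host ports zoned to both cluster node ports and third-party storage.

    zones maps a zone name to a list of member aliases (hypothetical format).
    Members that are in neither storage set are assumed to be host ports.
    """
    reaches_svc = set()
    reaches_third_party = set()
    for members in zones.values():
        members = set(members)
        hosts = members - svc_ports - third_party_ports
        if members & svc_ports:
            reaches_svc |= hosts
        if members & third_party_ports:
            reaches_third_party |= hosts
    return sorted(reaches_svc & reaches_third_party)


# Hypothetical zoning export.
zones = {
    "z1": ["host1_p0", "v7k_n1_p1"],
    "z2": ["host1_p0", "array_p0"],
    "z3": ["host2_p0", "v7k_n1_p1"],
    "z4": ["host3_p0", "array_p1"],
}
svc_ports = {"v7k_n1_p1"}
third_party_ports = {"array_p0", "array_p1"}

print(dual_zoned_host_ports(zones, svc_ports, third_party_ports))
```

Each port in the output is one where a slow-drain condition on the third-party storage can back up traffic that is also destined for the cluster.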
