Physical Switch SAN Implementation for an SVC Hyperswap Cluster

In February 2020 I wrote a post on the supported SAN design for SVC and Spectrum Virtualize Hyperswap clusters. In that post I covered some of the problems that arise from improper SAN design for SVC clusters in a Hyperswap configuration. At its most basic, the Hyperswap requirement is to have completely separate fabrics for private traffic, which is used only for inter-node communication within the cluster, and one or more public fabrics for everything else. There are various ways to implement SANs that meet that requirement. This is one in a series of blog posts that will discuss some of the fabric design options within that framework and provide implementation details for Cisco and Brocade fabrics. I will also show you some of the common mistakes made in SAN implementations. As with that post, while I may only reference SVC in this series (for example, the diagram below depicts an SVC cluster), the recommendations apply equally to SVC, Spectrum Virtualize and IBM FlashSystem products. Remember that this also applies to Stretched clusters.

Using Physical Switches

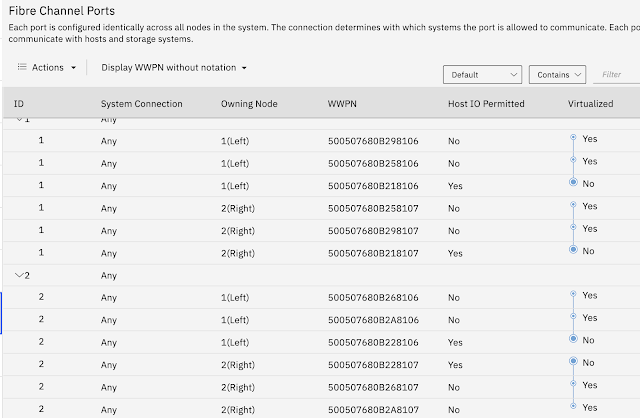

The first design we will look at is the simplest in both concept and execution. As the figure shows, there are 8 physical switches in this design.

The overall SAN consists of 4 two-switch fabrics. There are two redundant private fabrics for the inter-node ports and two redundant public fabrics for everything else. This diagram includes hosts and storage related to the cluster, but the public fabrics could also include other devices that are not connected to this cluster.

Note the third site for the quorum. You can also implement the quorum as an IP quorum, which removes the need for storage at the third site for a disk-based quorum; however, it is still recommended that the quorum be at a third site, even if it is an IP quorum.

Also note that there are two fabrics on one provider and two fabrics on a second provider. The links between the two sites will be either Fibre Channel over IP (FCIP) or a technology such as DWDM, ONS or simply dark fibre that carries native Fibre Channel traffic. Splitting the fabrics across the providers in this way provides full redundancy in the event that one of the providers has a problem. The next best option is to use a single provider but ensure that the links between the sites take different routes through the provider's infrastructure.
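To make the provider-redundancy argument concrete, here is a minimal Python sketch that models each of the four fabrics by its role and by the provider carrying its inter-site link, then checks whether losing any single provider still leaves both a private and a public fabric spanning the sites. It assumes each provider carries one private and one public fabric, which is what makes the split fully redundant; the fabric and provider names are hypothetical, and this is an illustration rather than part of any IBM or switch-vendor tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fabric:
    name: str
    role: str      # "private" (inter-node only) or "public" (hosts, storage, everything else)
    provider: str  # carrier whose inter-site link joins this fabric's two switches


# Hypothetical 8-switch layout: four two-switch fabrics, with each
# provider carrying one private and one public fabric.
FABRICS = [
    Fabric("private-1", "private", "provider-A"),
    Fabric("public-1", "public", "provider-A"),
    Fabric("private-2", "private", "provider-B"),
    Fabric("public-2", "public", "provider-B"),
]

REQUIRED_ROLES = {"private", "public"}


def surviving_roles(fabrics, failed_provider):
    """Roles of the fabrics that still span both sites after one provider fails."""
    return {f.role for f in fabrics if f.provider != failed_provider}


def survives_any_single_provider_failure(fabrics):
    """True if losing any one provider still leaves a private and a public fabric."""
    providers = {f.provider for f in fabrics}
    return bool(providers) and all(
        REQUIRED_ROLES <= surviving_roles(fabrics, p) for p in providers
    )


if __name__ == "__main__":
    for provider in sorted({f.provider for f in FABRICS}):
        print(provider, "down ->", sorted(surviving_roles(FABRICS, provider)))
    print("redundant:", survives_any_single_provider_failure(FABRICS))
```

Modelling two diverse routes through a single provider as separate entries would approximate the next best option described above, although the check cannot see a provider-wide outage that takes down both routes at once.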

The above design could use Cisco or Brocade switches. This design has these advantages:

- Simple configuration, as each of the 4 fabrics is a basic two-switch design

- Because it uses physical switches, it eliminates the possibility of the public and private fabrics sharing the ISLs between the sites. That is a common mistake, and future blog posts will cover it in more detail.

The above design is more expensive than the other implementation options because it requires 8 switches, and for that reason it is an atypical design. An alternative design that uses only 4 switches is shown below. It looks similar to the first design; note the continued presence of the dedicated ISLs between the sites. As before, there are redundant fabrics on two different providers.

However, in this design each SAN switch is partitioned into logical virtual switches. Cisco calls these VSANs and Brocade calls them Virtual Fabrics, but the idea is the same. Each virtual switch is a switch unto itself, and when linked with other virtual switches it forms a virtual fabric (or VSAN) within the physical fabric. These virtual fabrics are distinct entities, with their own zoning, name server databases and nearly everything else that a physical fabric has. This 4-switch design is the most common one that IBM SAN Central sees. In future blog posts I will show you the most common problem we see and how to avoid it.
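Because the 4-switch design relies on virtual switches, the property to protect is that no inter-site ISL carries both a private and a public logical fabric, which is the sharing mistake mentioned in the advantages list. The minimal Python sketch below checks that property over a hypothetical list of ISLs, each tagged with the logical fabrics (VSANs or Virtual Fabrics) it carries. The link and fabric names are illustrative assumptions, and the input would have to be collected from the switch configuration by hand or with your own tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Isl:
    name: str
    logical_fabrics: frozenset  # logical fabrics (VSANs / Virtual Fabrics) carried on this link


# Hypothetical role of each logical fabric in the 4-switch design.
ROLES = {
    "private-1": "private",
    "public-1": "public",
    "private-2": "private",
    "public-2": "public",
}


def shared_isls(isls, roles):
    """Return names of ISLs that carry both private and public logical fabrics."""
    offenders = []
    for isl in isls:
        carried_roles = {roles[fabric] for fabric in isl.logical_fabrics}
        if {"private", "public"} <= carried_roles:
            offenders.append(isl.name)
    return offenders


# Dedicated ISLs: each link carries exactly one logical fabric.
DEDICATED = [
    Isl("isl-1", frozenset({"private-1"})),
    Isl("isl-2", frozenset({"public-1"})),
    Isl("isl-3", frozenset({"private-2"})),
    Isl("isl-4", frozenset({"public-2"})),
]

# The mistake: one trunk carrying both a private and a public logical fabric.
SHARED = [
    Isl("trunk-1", frozenset({"private-1", "public-1"})),
    Isl("isl-3", frozenset({"private-2"})),
    Isl("isl-4", frozenset({"public-2"})),
]

if __name__ == "__main__":
    print("dedicated design offenders:", shared_isls(DEDICATED, ROLES))
    print("shared design offenders:", shared_isls(SHARED, ROLES))
```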

Variations on both of the above implementations include having separate public fabrics for hosts and controllers, or having a third fabric that is dedicated to replication if the SVC or Spectrum Virtualize storage system is replicating data to another cluster. However, the key requirement is the presence of the dedicated fabric for the inter-node communication. If that requirement is not met, then the design is invalid.
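One way to read that rule as a check: at least one private fabric must exist, and every port logged into a private fabric must be an inter-node port on an SVC node. The Python sketch below is a minimal illustration under that reading. The port identifiers, device labels and usage labels are hypothetical, and the fabric membership would come from your own name server or zoning review. Host, storage or replication ports that appear on a private fabric are reported as violations, since they belong on a public or dedicated replication fabric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    port_id: str  # hypothetical label; in practice this would be a WWPN
    device: str   # "svc-node", "host", "storage", ...
    usage: str    # "inter-node", "host", "replication", ...


def is_inter_node_port(port):
    """Only SVC node ports used for inter-node traffic belong on a private fabric."""
    return port.device == "svc-node" and port.usage == "inter-node"


def private_fabric_violations(private_fabrics):
    """Map each private fabric name to the ports that do not belong on it."""
    violations = {}
    for name, ports in private_fabrics.items():
        misplaced = [p.port_id for p in ports if not is_inter_node_port(p)]
        if misplaced:
            violations[name] = misplaced
    return violations


def design_is_valid(private_fabrics):
    """Valid only if a private fabric exists and carries nothing but inter-node ports."""
    return bool(private_fabrics) and not private_fabric_violations(private_fabrics)


if __name__ == "__main__":
    fabrics = {
        "private-1": [
            Port("node1-p3", "svc-node", "inter-node"),
            Port("node2-p3", "svc-node", "inter-node"),
        ],
        "private-2": [
            Port("node1-p4", "svc-node", "inter-node"),
            Port("host7-p1", "host", "host"),  # misplaced host port
        ],
    }
    print(private_fabric_violations(fabrics))
    print("valid:", design_is_valid(fabrics))
```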

For information on sizing the inter-site links you can read Jordan Fincher's blog here.

One final note: it is strongly recommended that you do not use Cisco IVR, Brocade LSAN zoning or other Fibre Channel routing features on the private fabrics. Because the SVC node ports will be the only devices on the private fabrics, letting the fabrics merge between the sites will have minimal effect, even in lower-bandwidth environments. Once zoning is configured, it is unlikely to change, and there should not be any other fabric changes occurring frequently enough to justify adding IVR or LSAN zoning. Both features increase the complexity of the solution, for configuration and for troubleshooting, and they also introduce a potential failure point.

Hi David

Can we implement a simple Hyperswap with 2 servers and two IBM V5030s connected directly by FC, without any SAN switch?

Only SFPs and fibers.

Thanks
