In a previous post I covered the general requirements for SAN design for Spectrum Virtualize Hyperswap and Stretched clusters. In this follow-on post, we'll look at a sample implementation on a Cisco or IBM C-type fabric. While there are several variations on implementation (FCIP vs. Fibre Channel ISLs is one example), the basics shown here can be readily adapted to any specific design. This implementation will also show you how to avoid one of the most common errors that IBM SAN Central sees on Hyperswap clusters: ISLs on a Cisco private VSAN that are allowed to carry traffic for multiple VSANs.

We will implement the design below, where the public fabric is VSAN 6 and the private fabric is VSAN 5. The diagram shows one of two redundant fabrics. The quorum depicted can be either an IP quorum or a third-site quorum. For the purposes of this blog post, VSAN 6 has already been created and has devices in it. We'll create VSAN 5, add the internode ports to it and ensure that the Port-Channels are configured correctly. We'll also verify that Port-Channel 3 on the public side is configured so that VSAN 5 stays dedicated as a private fabric. In the examples below, Switch 1 is at Failure Domain 1 and Switch 2 is at Failure Domain 2.

Hyperswap SAN Design

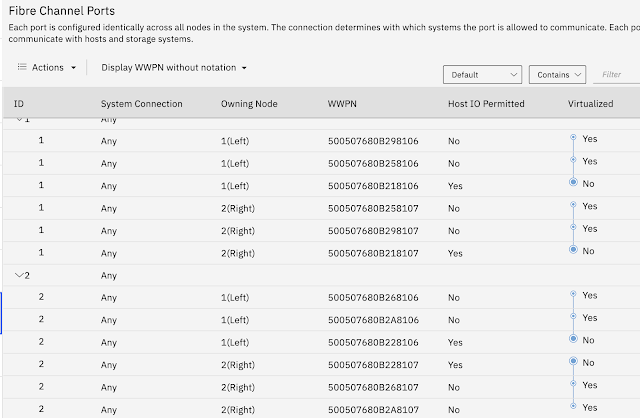

Before we get started, the Spectrum Virtualize ports should have the local port mask set so that at least 1 port per node per fabric is dedicated to internode traffic. Below is the recommended port masking configuration for Spectrum Virtualize clusters. This blog post assumes that this has already been completed.

Recommended Port Masking

Now let's get started by creating the private VSAN:

switch1# conf t

switch1(config)# vsan database

switch1(config-vsan-db)# vsan 5 name private

switch2# conf t

switch2(config)# vsan database

switch2(config-vsan-db)# vsan 5 name private

Next, we'll add the internode ports for our cluster. For simplicity in this example, we're working with a 4 node cluster, and the ports we want to use are connected to the first two ports of Modules 1 and 2 on each switch. We're only adding 1 port per node here. Remember that there is a redundant private fabric to configure which will have the remaining internode ports attached to it.

switch1# conf t

switch1(config)# vsan database

switch1(config-vsan-db)# vsan 5 interface fc1/1, fc2/1

switch2# conf t

switch2(config)# vsan database

switch2(config-vsan-db)# vsan 5 interface fc1/1, fc2/1
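Before moving on, it may be worth confirming that VSAN 5 exists and that the internode ports landed in it. The check below is a minimal sketch using standard NX-OS show commands; output is omitted because it varies by platform and release.

```text
! Run on each switch (switch1 shown); VSAN 5 and the ports come from the steps above
switch1# show vsan 5
switch1# show vsan 5 membership
```

The membership listing should include fc1/1 and fc2/1 on each switch.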

Next we need to build the port-channel for VSAN 5. For Cisco, 'trunking' means carrying traffic for multiple VSANs over a single link. Setting the trunk mode to 'off' ensures that the port-channel carries traffic only for the single VSAN we specify, so no other VSANs can traverse the port-channel on the private VSAN. Having multiple VSANs traversing the ISLs on the private fabric is one of the most common issues that SAN Central finds on Cisco fabrics. This is because trunking is allowed by default, and all VSANs are added to the allowed list by default when ISLs are created. We will also set the allowed VSANs parameter to only allow traffic for VSAN 5. Lastly, we'll add the port-channel to VSAN 5 on each switch. This keeps things tidy and also sets VSAN 5 as the port VSAN, which is the one VSAN a non-trunking ISL carries.

switch1# conf t

switch1(config)# int port-channel4

switch1(config-if)# switchport mode E

switch1(config-if)# switchport trunk mode off

switch1(config-if)# switchport trunk allowed vsan 5

switch1(config-if)# int fc1/14

switch1(config-if)# channel-group 4

switch1(config-if)# int fc2/14

switch1(config-if)# channel-group 4

switch1(config-if)# vsan database

switch1(config-vsan-db)# vsan 5 interface port-channel4

switch2# conf t

switch2(config)# int port-channel4

switch2(config-if)# switchport mode E

switch2(config-if)# switchport trunk mode off

switch2(config-if)# switchport trunk allowed vsan 5

switch2(config-if)# int fc1/14

switch2(config-if)# channel-group 4

switch2(config-if)# int fc2/14

switch2(config-if)# channel-group 4

switch2(config-if)# vsan database

switch2(config-vsan-db)# vsan 5 interface port-channel4

The next steps would be to bring up Port-Channel 4 and the underlying interfaces on Switch 1 and Switch 2, ensure the VSANs have merged correctly and, lastly, zone the Spectrum Virtualize node ports together.
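On Switch 1, those steps might look roughly like the following. This is a sketch, not a tested procedure. The zone name SVC_INTERNODE, the zoneset name PRIVATE_ZS and the pWWN placeholders are hypothetical, so substitute your own names and the WWPNs of the internode ports on your cluster. The no shutdown steps need to be repeated on Switch 2. The zoning only needs to be entered on one switch, since activating the zoneset distributes it across the merged VSAN.

```text
! Bring up the port-channel and its member interfaces
switch1# conf t
switch1(config)# int port-channel4
switch1(config-if)# no shutdown
switch1(config-if)# int fc1/14
switch1(config-if)# no shutdown
switch1(config-if)# int fc2/14
switch1(config-if)# no shutdown
switch1(config-if)# end
! Check the port-channel members, then that both switches and all
! internode ports are visible in VSAN 5
switch1# show port-channel database
switch1# show fcdomain domain-list vsan 5
switch1# show fcns database vsan 5
! Hypothetical zone and zoneset names; replace each <...> with a real WWPN
switch1# conf t
switch1(config)# zone name SVC_INTERNODE vsan 5
switch1(config-zone)# member pwwn <node1-internode-wwpn>
switch1(config-zone)# member pwwn <node2-internode-wwpn>
switch1(config-zone)# member pwwn <node3-internode-wwpn>
switch1(config-zone)# member pwwn <node4-internode-wwpn>
switch1(config-zone)# zoneset name PRIVATE_ZS vsan 5
switch1(config-zoneset)# member SVC_INTERNODE
switch1(config-zoneset)# zoneset activate name PRIVATE_ZS vsan 5
```

If the VSANs merged correctly, the domain list should show both switches, and the name server database should list the internode ports from both failure domains.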

We also need to examine Port-channel 3 on the public fabric to ensure it is not carrying traffic for the private VSAN. To do this:

switch1# show interface port-channel3

......

......

Admin port mode is auto, trunk mode is auto

Port vsan is 1

Trunk vsans (admin allowed and active) (1,3,5,6)

Unlike the port-channel for the private VSAN, this one can have its trunk mode set to auto or on. It serves the public fabric, so there may be multiple VSANs using this Port-Channel. The problem is in the Trunk vsans line: we are allowing private VSAN 5 to traverse this Port-Channel. This must be corrected using the same switchport trunk allowed vsan command shown above for Port-Channel 4. Your allowed VSANs statement would include all of the current VSANs except VSAN 5.

On a side note, it is a good idea to review which VSANs are allowed to be trunked across port-channels or ISLs. Allowed VSANs that are not defined on the remote switch for a given ISL will show up as Isolated on the switch you run the above command on. The only VSANs that should be allowed are the ones that should be running across the ISL. You would need to perform the same check on Port-Channel 3 on Switch 2.
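For the output shown above, the correction on Switch 1 could be sketched as follows. It assumes that VSANs 1, 3 and 6 all genuinely need to cross this Port-Channel, which you should confirm for your own fabric. Setting the allowed list replaces the default of all VSANs, and the add keyword appends to the list.

```text
! Assumption: VSANs 1, 3 and 6 are the only ones that should cross Port-Channel 3
switch1# conf t
switch1(config)# int port-channel3
switch1(config-if)# switchport trunk allowed vsan 1
switch1(config-if)# switchport trunk allowed vsan add 3
switch1(config-if)# switchport trunk allowed vsan add 6
switch1(config-if)# end
! Re-run the check; VSAN 5 should no longer be in the Trunk vsans list
switch1# show interface port-channel3
```

Changing the allowed list on a live ISL affects traffic for any VSAN that is removed, so apply it to one of the redundant fabrics at a time.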

Lastly, the above commands can be used for FCIP interfaces or standalone FC ISLs. You would just substitute the interface name for port-channel4 in the above example. Note that it is recommended to configure standalone ISLs as port-channels. You can read more about that here.

I hope this answers your questions and helps you with implementing your next Spectrum Virtualize Hyperswap cluster. If you have any questions, find me on LinkedIn or Twitter, or post in the comments.
