An Easy Way To Turn Your Flash Storage Supercar Into a Yugo

Introduction

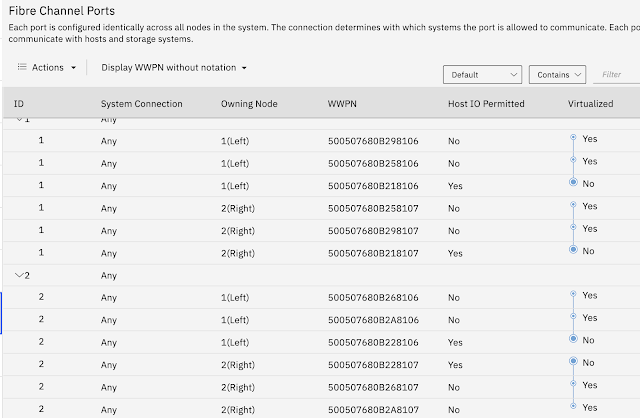

This past summer I was brought in on a SAN performance problem for a customer. When I was first engaged, it was a host performance problem. A day or two later, the customer had an outage on a Spectrum Scale cluster. That outage was root-caused to a misconfiguration on the Spectrum Scale cluster: it did not re-drive some I/O commands that had timed out. The next logical question was why the I/O timed out. Both the impacted hosts and the Spectrum Scale cluster used an SVC cluster for storage.

I already suspected the problem was due to an extremely flawed SAN design. More specifically, the customer had deviated from best-practice connectivity and zoning for their SVC cluster and Controllers. A 'Controller' in Storwize/SVC-speak is any storage enclosure: Flash, DS8000, another Storwize product such as V7000, or perhaps non-IBM branded storage. In this case, the customer had 3 Controllers. Two were IBM Flash arrays. For the purposes of this blog post, we will focus on those and on how the customer's SAN design negatively impacted their IBM Flash systems.

Best-Practice SVC/Storwize SAN Connectivity and Zoning

The figure below depicts best-practice port connectivity and zoning for SVC and Controllers on a dual-core fabric design. (This assumes you have two redundant fabrics, each configured as shown below.) As we can see, ideally our SVC cluster and Controllers are connected to both of our core switches. A single-core design obviously does not have the potential for this misconfiguration, since all SVC and Controller ports on a given fabric are connected to the same physical switch. In a mesh design, we would want to apply the same basic principle of connecting SVC and Controller ports to the same physical switch(es). Zoning must be configured such that the SVC ports on each switch are zoned only to the Controller ports attached to the same switch. The goal is to avoid unnecessary traffic flowing across the ISL between the switches. In the example below, we have two zones. Zone 1 includes the SVC and Controller ports attached to the left-most switch. Zone 2 includes the SVC and Controller ports attached to the right-most switch.
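The zoning rule above can be checked mechanically. The following is a minimal Python sketch, not a vendor tool: the port names, switch names, and the two dictionaries are hypothetical stand-ins for whatever your switch and zoning exports actually contain. It simply flags any zone whose members are attached to more than one physical switch.

```python
from typing import Dict, List


def cross_switch_zones(
    port_switch: Dict[str, str], zones: Dict[str, List[str]]
) -> Dict[str, List[str]]:
    """Return the zones whose member ports attach to more than one switch.

    port_switch maps a port name to the switch it is cabled to.
    zones maps a zone name to the list of member port names.
    Both structures are hypothetical; populate them from your own fabric data.
    """
    offenders = {}
    for zone, members in zones.items():
        switches = sorted({port_switch[member] for member in members})
        if len(switches) > 1:
            offenders[zone] = switches
    return offenders


# Hypothetical fabric: SVC and Controller ports split across two cores.
port_switch = {
    "svc_n1_p1": "core1", "svc_n2_p1": "core1", "ctrl_a_p1": "core1",
    "svc_n1_p2": "core2", "svc_n2_p2": "core2", "ctrl_a_p2": "core2",
}

# Best practice: each zone stays on one switch.
best_practice = {
    "zone1": ["svc_n1_p1", "svc_n2_p1", "ctrl_a_p1"],
    "zone2": ["svc_n1_p2", "svc_n2_p2", "ctrl_a_p2"],
}

# Flawed: SVC ports on core1 zoned to a Controller port on core2.
flawed = {"zone1": ["svc_n1_p1", "svc_n2_p1", "ctrl_a_p2"]}

print(cross_switch_zones(port_switch, best_practice))  # {}
print(cross_switch_zones(port_switch, flawed))  # {'zone1': ['core1', 'core2']}
```

Any zone this reports forces SVC-to-Controller traffic across the ISL, which is exactly what the best-practice layout is meant to avoid.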

Customer Deviations from Best-Practice on a Dual-Core Fabric

The next figure is the design the customer had. The switches in question are Brocade-branded, but the design would be flawed regardless of the switch vendor. The problem should be obvious. With the below design, all traffic moving from the SVC to the backend Controllers has to cross the ISL, which in this case was a 32 Gbps trunk. The switch data showed that multiple ports in the trunk were congested: there were transmit discards and timeouts on frames moving in both directions, and both switches were logging bottleneck messages on the ports in the trunk. The SVC was logging repeated command timeouts and errors indicating it was having problems talking to ports on the Controllers. Lastly, the SVC was showing elevated response times to the Flash storage. All of this was due to the congested ISL. With this design, the client was not getting the ROI or the response times it should have been getting from the Flash storage. Of course, all of the error correction and recovery caused re-transmission of frames and increased load on the fabric, which made an already untenable situation worse. The immediate fix to provide some relief was to double the bandwidth of the ISL on both fabrics. The long-term fix was to re-connect ports and zone appropriately to get to best practice.

Customer Host Connectivity and a Visual of the Effect on the Fabric

The last figure shows the customer host connectivity and the effect this flawed design had on the fabric. We can see from the figure that the client had both the underperforming hosts and the GPFS/Spectrum Scale cluster connected to DCX 2, where the Controllers were connected. With this design, we can see that data must traverse the ISL four times. Traffic on the ISLs could be immediately reduced by half by moving half of the SVC ports to DCX 2 and half of the Controller ports to DCX 1, and then zoning to best practice as in the first figure in this blog post. In addition to the unnecessary traffic on the congested ISL, redundancy is reduced, since this design is vulnerable to a failure of either DCX 1 or DCX 2. While the client did have a redundant fabric, a failure of either of those switches means a total loss of connectivity from SVC to Controllers on one of the fabrics. That is significant. ISL traffic could be further reduced (and reliability increased at the host level) by moving half of the GPFS cluster ports (and other critical host ports) to DCX 1 and zoning appropriately. In this way, the only traffic crossing the ISLs would be from hosts or other devices that don't have enough ports to be connected to both cores, plus whatever traffic is necessary to maintain the fabric. Both the SVC-to-Controller and the host-to-SVC traffic would then be much less vulnerable to any delays on the ISLs or congestion in either fabric.
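To make the ISL traversal count concrete, here is a small Python sketch under one assumption: that a single I/O is modeled as a round trip of host to SVC to Controller and back again. The hop list and the device-to-switch placements are illustrative, not taken from the customer's configuration data. The function counts every hop whose two endpoints sit on different switches.

```python
from typing import Dict, List


def isl_crossings(path: List[str], location: Dict[str, str]) -> int:
    """Count hops in path whose endpoints are attached to different switches."""
    return sum(
        1 for src, dst in zip(path, path[1:]) if location[src] != location[dst]
    )


# Assumed model of one I/O: request goes out, data comes back the same way.
round_trip = ["host", "svc", "controller", "svc", "host"]

# Customer layout: hosts and Controllers on DCX 2, SVC on DCX 1.
customer_layout = {"host": "DCX2", "svc": "DCX1", "controller": "DCX2"}

# Best practice for a host with ports on the same core as the SVC and
# Controller ports it is zoned to.
local_layout = {"host": "DCX2", "svc": "DCX2", "controller": "DCX2"}

print(isl_crossings(round_trip, customer_layout))  # 4
print(isl_crossings(round_trip, local_layout))  # 0
```

Under this model the customer layout crosses the ISL on every hop, which matches the four traversals described above, while a path that stays on one core never touches the ISL.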
