Troubleshooting SVC/Storwize NPIV Connectivity

Some Background:

A few years ago IBM introduced the virtual WWPN (NPIV) feature to the Spectrum Virtualize (SVC) and Spectrum Storwize products. This feature allows you to zone your hosts to a virtual WWPN (vWWPN) on the SVC/Storwize cluster. If a cluster node has a problem or is taken offline for maintenance, the vWWPN can float to the other node in the I/O group. This increases fault tolerance, because the hosts no longer have to perform path failover to move I/O to the other node in the I/O group.

Everything I've read so far on this feature is written from the perspective of someone who is going to configure it. My perspective is different, because I troubleshoot issues on the SAN connectivity side. This post covers some of the procedures and data you can use to troubleshoot connectivity to the SVC/Storwize when the NPIV feature is enabled, as well as some best practices to help avoid problems.

If you are unfamiliar with this feature, there is an excellent IBM RedPaper that covers both this feature and the Hot Spare Node feature:

An NPIV Feature Summary

1: SVC/Storwize has three modes for NPIV - "Enabled", "Transitional" or "Off".

2: Enabled means the feature is fully active, and hosts attempting to log in to the physical WWPN (pWWPN) of a Storwize port are rejected. Transitional means the feature is active, but the SVC/Storwize accepts host logins to either the vWWPN or the pWWPN. Off means the feature is not enabled.

3: Transitional mode is not intended to be left on permanently. You use it while you are re-zoning hosts to use the vWWPNs instead of the pWWPNs.

4: For NPIV failover to work, every SVC/Storwize node must have the same ports connected to each fabric. For example, assume this connection scheme for an 8-port node with redundant fabrics:

| Node Port | Fabric A | Fabric B |
|---|---|---|
| 1 | x | |
| 2 | x | |
| 3 | x | |
| 4 | x | |
| 5 | x | |
| 6 | x | |
| 7 | x | |
| 8 | x | |

All the nodes must follow the same connection scheme. Hot-spare node failover will also fail if the nodes are mis-cabled. To be clear, I am not advocating the above scheme per se, only that all the nodes must match as to which ports are connected to which fabrics.

5: I was asked at IBM Tech U in Orlando if the SVC/Storwize Port Masking feature is affected by the NPIV feature. The answer is no. Any existing port masking configuration is still in effect.

6: pWWPNs are used for inter-node and inter-cluster (replication) traffic, as well as for controller (back-end storage) connectivity. vWWPNs are used only for hosts.
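Items 2 and 4 reduce to simple rules that can be checked mechanically. The Python sketch below is my own illustration, not an IBM tool: host_login_accepted models which kind of target WWPN accepts a host login in each NPIV mode, and cabling_mismatches compares every node's port-to-fabric map against the first node's. The function names and the input format (a per-node dictionary of port number to fabric name, which you would build by hand from cabling records or switch data) are assumptions.

```python
from enum import Enum


class NpivMode(Enum):
    """The three NPIV target-port modes (item 1)."""
    ENABLED = "enabled"
    TRANSITIONAL = "transitional"
    OFF = "off"


def host_login_accepted(mode: NpivMode, target_is_virtual: bool) -> bool:
    """Item 2: is a host login to this kind of target WWPN accepted?"""
    if mode is NpivMode.ENABLED:
        return target_is_virtual        # logins to pWWPNs are rejected
    if mode is NpivMode.TRANSITIONAL:
        return True                     # vWWPN or pWWPN are both accepted
    return not target_is_virtual        # Off: hosts use the pWWPNs


def cabling_mismatches(node_ports: dict[str, dict[int, str]]) -> list[str]:
    """Item 4: every node must connect the same port numbers to the same fabrics.

    node_ports maps node name -> {port number: fabric name} (hypothetical
    input format). The first node in the dict is the reference scheme.
    """
    if not node_ports:
        return []
    ref_name, reference = next(iter(node_ports.items()))
    problems = []
    for name, ports in node_ports.items():
        if name == ref_name:
            continue
        # Check every port seen on either node, so missing cables show up too.
        for port in sorted(set(reference) | set(ports)):
            expected, actual = reference.get(port), ports.get(port)
            if expected != actual:
                problems.append(
                    f"{name} port {port}: fabric {actual}, {ref_name} has {expected}"
                )
    return problems


if __name__ == "__main__":
    scheme = {1: "A", 2: "B", 3: "A", 4: "B"}   # example scheme only
    nodes = {
        "node1": dict(scheme),
        "node2": dict(scheme),
        "spare1": {1: "A", 2: "A", 3: "B", 4: "B"},  # mis-cabled hot spare
    }
    for line in cabling_mismatches(nodes):
        print(line)
```

With the sample data only spare1 is reported, for ports 2 and 3, which is exactly the kind of mismatch that breaks both NPIV and hot-spare failover.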

A Suggestion: A recommendation I heard at IBM Technical University in May is that, if you use FC aliases in your zoning, you add the vWWPN to the existing alias for each SVC/Storwize cluster port so that you don't have to rezone each individual host. While that is an easy way to transition, it creates a potential problem. After you move the cluster from Transitional to Enabled, the cluster starts rejecting host port logins (PLOGI) to the pWWPNs. At best, all this does is fill up a log with rejected logins, at which point you call support because you notice a host logging login rejects. At worst, it causes actual problems when the adapter, and possibly the multipath driver, attempt to deal with the rejects.

Before moving the NPIV mode to Enabled, you need to remove the pWWPN from each FC alias, but first you must make sure you are not using the same aliases to zone your back-end storage. If you are, and you remove the pWWPN from the alias, you will lose controller connectivity. If you are using a Storwize product with internal storage and no controllers, this is not an issue and the pWWPN can be removed from the alias. If you do have back-end storage and currently use the same aliases for both host and controller zoning, it might be easier to create new aliases for the pWWPNs and rezone the controllers to them, or simply rezone the controllers directly to the pWWPNs, before modifying the existing aliases to use the vWWPNs. There will be fewer zones to modify for the controllers than for the hosts.
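The alias clean-up can be sketched as a pre-flight audit to run before switching to Enabled. This is a hypothetical Python helper, not an IBM or switch-vendor tool. It assumes you have exported each alias's members from the switch, have the vWWPN and pWWPN lists from the cluster, and know which aliases appear in controller zones; every other alias containing a cluster port is assumed to be a host alias. It normalizes WWPNs because switches typically print them colon-separated while the cluster CLI prints them without colons. All names and WWPNs in the sample are made up.

```python
def norm(wwpn: str) -> str:
    """Compare 50:05:07:68:... (switch style) and 50050768... (cluster style)."""
    return wwpn.replace(":", "").strip().lower()


def audit_aliases(
    aliases: dict[str, set[str]],
    vwwpns: set[str],
    pwwpns: set[str],
    controller_aliases: set[str],
) -> dict[str, str]:
    """Return alias name -> finding for aliases that need attention before Enabled.

    aliases: alias name -> member WWPNs, as exported from the switch.
    vwwpns / pwwpns: the cluster's virtual and physical target WWPNs.
    controller_aliases: aliases also used in back-end controller zones.
    """
    virt_all = {norm(w) for w in vwwpns}
    phys_all = {norm(w) for w in pwwpns}
    findings: dict[str, str] = {}
    for name, members in sorted(aliases.items()):
        members_n = {norm(m) for m in members}
        virt, phys = members_n & virt_all, members_n & phys_all
        if not virt and not phys:
            continue  # alias does not contain any cluster port
        if name in controller_aliases:
            if not phys:
                findings[name] = "controller alias has no pWWPN; back-end logins use pWWPNs"
            elif virt:
                findings[name] = ("shared by host and controller zones; move controllers "
                                  "to a pWWPN-only alias before removing the pWWPN")
        elif phys:
            if virt:
                findings[name] = "remove pWWPN(s) before Enabled: " + ", ".join(sorted(phys))
            else:
                findings[name] = "host alias points only at pWWPN(s); add the vWWPN"
    return findings


# Hypothetical sample data (made-up WWPNs) for illustration only.
SAMPLE_V = {"500507681051AAAA", "500507681052AAAA"}
SAMPLE_P = {"500507681011AAAA", "500507681012AAAA"}
SAMPLE_ALIASES = {
    "svc_p1": {"50:05:07:68:10:11:aa:aa", "50:05:07:68:10:51:aa:aa"},
    "svc_p2": {"50:05:07:68:10:12:aa:aa"},
    "svc_p2_shared": {"50:05:07:68:10:12:aa:aa", "50:05:07:68:10:52:aa:aa"},
    "ctl_p1": {"50:05:07:68:10:11:aa:aa"},
    "host_a": {"10:00:00:00:c9:aa:aa:01"},
}
SAMPLE_CONTROLLER_ALIASES = {"ctl_p1", "svc_p2_shared"}

if __name__ == "__main__":
    report = audit_aliases(SAMPLE_ALIASES, SAMPLE_V, SAMPLE_P, SAMPLE_CONTROLLER_ALIASES)
    for alias, finding in report.items():
        print(f"{alias}: {finding}")
```

On the sample it flags svc_p1 (remove the pWWPN), svc_p2 (still pWWPN-only) and svc_p2_shared (shared with controller zoning). ctl_p1 is left alone, because a pWWPN-only controller alias is what you want.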

Troubleshooting Connectivity to the SVC/Storwize Cluster

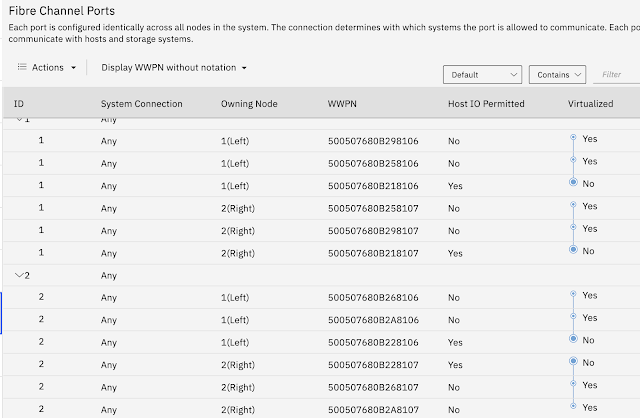

One of the most common causes of connectivity problems, or of path failover not working as it should, is incorrect zoning. To that end, you first need to verify which vWWPNs you should be using. The easiest way is to run lstargetportfc on the cluster CLI to list the vWWPNs; lsportfc lists the pWWPNs. Starting at version 8.1.1, the lstargetportfc output is included by default in the svcout file; on earlier versions you need to run it separately. Once you have that list, you can use the Fibre Channel Connectivity listing in the SVC/Storwize GUI and its filtering capabilities to filter on the vWWPNs and/or the pWWPNs and determine whether any hosts are connected to the pWWPNs. You can also capture the output of lsfabric -delim , and import that CSV into Excel or a similar tool for better sorting and filtering than the system GUI offers.

If the host is missing, or is connected to the pWWPNs, you need to check and verify the zoning. This is also a good time to verify that controllers are connecting to the pWWPNs and, if you are using a hot-spare node, that the controllers are zoned to the ports on the hot-spare. I had a case recently where, although it wasn't the reason the customer opened the ticket, I noticed they had not zoned one of their controllers to the hot-spare node. In the event of a node failure, the failover would not have worked as expected.
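The lsfabric review can also be scripted instead of done in a spreadsheet. The sketch below is an illustration under assumptions, not an IBM utility: it reads comma-delimited lsfabric output, flags host logins to anything other than a known vWWPN, flags controller logins to a vWWPN, and reports controllers that are not logged in to every node you list. The column names (remote_wwpn, node_name, local_wwpn, name, type) and the type values "host" and "controller" are what I expect lsfabric to report, but check them against your own code level's output. The hot-spare check assumes the spare's logins are reported under its node name; the function name, parameters, and sample data are hypothetical.

```python
import csv
import io
from collections import defaultdict


def norm(wwpn: str) -> str:
    """Compare WWPNs without colons or case differences."""
    return wwpn.replace(":", "").strip().lower()


def audit_lsfabric(lsfabric_csv: str, vwwpns: set[str], node_names: set[str]) -> list[str]:
    """Flag suspect logins in `lsfabric -delim ,` output (assumed column names).

    vwwpns: the cluster's vWWPNs, e.g. copied from lstargetportfc.
    node_names: every node that controllers should reach, including any hot spare.
    """
    virt = {norm(w) for w in vwwpns}
    issues: list[str] = []
    controller_nodes: dict[str, set[str]] = defaultdict(set)
    for row in csv.DictReader(io.StringIO(lsfabric_csv.strip())):
        local = norm(row["local_wwpn"])
        kind = row["type"].strip().lower()
        if kind == "host" and local not in virt:
            # Host logged in to a physical port: its zoning still uses the pWWPN.
            issues.append(f"host {row['name']} ({row['remote_wwpn']}) on pWWPN "
                          f"{row['local_wwpn']} of {row['node_name']}")
        elif kind == "controller":
            if local in virt:
                # Back-end storage should log in to the pWWPNs, not the vWWPNs.
                issues.append(f"controller {row['name']} on vWWPN {row['local_wwpn']}")
            controller_nodes[row["name"]].add(row["node_name"])
    # Every controller should be seen on every listed node.
    for ctl, seen in sorted(controller_nodes.items()):
        missing = node_names - seen
        if missing:
            issues.append(f"controller {ctl} not logged in to: {', '.join(sorted(missing))}")
    return issues


# Made-up sample output for illustration only.
SAMPLE_LSFABRIC = """\
remote_wwpn,remote_nportid,id,node_name,local_wwpn,local_port,local_nportid,state,name,cluster_name,type
10000000C9AAAA01,010100,1,node1,500507681051AAAA,1,010200,active,hostA,,host
10000000C9AAAA02,010101,1,node1,500507681011AAAA,1,010201,active,hostB,,host
500507680100BBBB,010300,1,node1,500507681011AAAA,1,010201,active,ctl0,,controller
500507680100BBBB,010300,2,node2,500507681021AAAA,1,010202,active,ctl0,,controller
"""

if __name__ == "__main__":
    for issue in audit_lsfabric(SAMPLE_LSFABRIC, {"500507681051AAAA"},
                                {"node1", "node2", "spare1"}):
        print(issue)
```

On the sample it reports hostB logged in to a pWWPN and ctl0 missing from spare1. Keep in mind that in Enabled mode a host zoned only to a pWWPN will not appear at all, because its login is rejected, so a host missing from the output is as much a finding as one on a pWWPN.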
